What is a RAG Agent?

Let's first define what an AI agent is. What distinguishes an AI agent from a simple program is that it can make decisions: it analyzes a situation and independently chooses the best course of action.

Standard chatbots are the simplest form of such agents. Their goal is to find an answer in a pre-prepared knowledge base. But if the information isn't there or is outdated, two fundamental limitations of basic large language models (LLMs) emerge:

- Hallucinations: AI agents, and LLMs in general, are prone to generating plausible-sounding but false statements or answers that do not correspond to the real world.

- Outdated knowledge: an LLM can only answer with the information it was trained on. For example, if its training dataset was collected in 2023 and it is now 2025, the LLM knows nothing about events after 2023.

Retrieval-Augmented Generation (RAG) is an architecture designed to solve these problems.

Imagine giving your AI assistant a key to the corporate library and access to the internet. Before answering a question, it can consult these sources, find up-to-date information, and only then formulate a response.
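The "consult the sources first, then answer" idea comes down to two steps: retrieve the most relevant documents, then place them in the prompt before the model generates a reply. Below is a minimal, dependency-free sketch of that pattern. The tiny in-memory knowledge base, the word-overlap scoring (a simplified stand-in for the embedding search a production system would typically use), and the names `retrieve` and `build_prompt` are all hypothetical.

```python
import re

# Hypothetical in-memory "corporate library"; a real system would query a
# search index or a vector database instead.
KNOWLEDGE_BASE = [
    "Our support team works Monday to Friday, 9:00 to 18:00.",
    "The Pro plan includes priority support and a 99.9% uptime SLA.",
    "Refunds are processed within 14 days of the request.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question.

    Word overlap is a simplified stand-in for embedding similarity.
    """
    q_words = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Augment the user's question with the retrieved context."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n"
        f"Context:\n{context_block}\n\nQuestion: {question}"
    )


question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE))
print(prompt)  # this augmented prompt is what the LLM would receive
```

Telling the model to answer only from the supplied context is one common way RAG systems try to reduce hallucinations.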

Agentic RAG is the next step. It's not just about having access to the library, but having the intelligence to decide when and how to use it.

A RAG AI agent independently analyzes a request. If the question is simple ("What are your working hours?"), it will respond instantly. If it's complex ("Compare the features of your new product with a competitor's model announced yesterday"), it will understand that it needs to consult external sources.
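To make the "decide before acting" step concrete, here is a toy router. In a real agentic RAG system the LLM itself makes this judgment; the keyword list, the FAQ dictionary, and the `route` function are hypothetical stand-ins chosen only to illustrate the two possible outcomes.

```python
# Hypothetical signals that a question needs fresh, external information.
FRESHNESS_HINTS = ("yesterday", "today", "latest", "announced", "current", "news")

# Hypothetical answers the agent can give instantly from what it already knows.
FAQ = {
    "what are your working hours?": "We work Monday to Friday, 9:00 to 18:00.",
}


def route(question: str) -> str:
    """Return 'answer_directly' or 'use_external_sources' for a question.

    A real agent delegates this decision to the LLM; keyword matching is
    only a stand-in that makes the idea visible.
    """
    normalized = question.strip().lower()
    if normalized in FAQ:
        return "answer_directly"
    if any(hint in normalized for hint in FRESHNESS_HINTS):
        return "use_external_sources"
    return "answer_directly"


print(route("What are your working hours?"))
print(route("Compare your new product with a competitor's model announced yesterday"))
```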

FYI: Fintech company Klarna introduced its own AI assistant powered by OpenAI. In its first month, the assistant handled two-thirds of Klarna's customer service chats, doing the equivalent work of 700 full-time agents. Klarna estimated that it would drive a $40 million USD profit improvement in 2024.

The Evolution of AI Assistants

"Under the Hood": How We Build a RAG AI Agent

Creating such an agent may seem complicated, but modern tools let you assemble it like a construction set. Let's briefly go over the key stages without diving deep into the technical details:

Step 1: Preparing the Workspace

Before hiring a digital "employee," you need to prepare its environment. In our case, we use the n8n framework, deployed in a Docker container.

n8n is a visual workflow builder where we can connect different blocks (AI modules, databases, APIs) into a single process without writing complex code.

We create a new workflow and add the first block – a Chat Trigger. This is the "front door" of our office: it receives messages from the user and triggers all subsequent logic.

Step 2: OpenAI Integration

Now our agent needs intelligence. We will use one of the large language models (LLMs) from OpenAI as its "brain." To do this, we add the OpenAI Agent block.

After adding the API key, we connect our "input" (Chat Trigger) to the "brain" (OpenAI Agent). Now, when a user types "Hello," the system will pass this message to OpenAI, which will generate a response, for example: "Hello! How can I help you?". Our basic chatbot is ready.
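Under the hood, passing the user's message to OpenAI amounts to an HTTP request to the Chat Completions API. In n8n the block does this for you; the sketch below only builds the equivalent request so you can see what a basic, memoryless chatbot sends. The model name `gpt-4o-mini` and the placeholder key are assumptions, and the request is deliberately not sent.

```python
import json
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(user_message: str, api_key: str,
                       model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for one message.

    The default model name is an assumption; use any model your account offers.
    """
    body = {
        "model": model,
        # A basic chatbot sends only the latest message: no history, no tools.
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("Hello", api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)["choices"][0]["message"]["content"]
```

Because each request carries only the newest message, the model has no way to know what was said before, which is exactly the limitation the next section addresses.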

Teaching the Agent to Remember: From Short-Term Memory to Long-Term Knowledge

One of the main drawbacks of a basic chatbot is its lack of memory. Each of its responses is independent of the previous ones.

"Memory is the ability of an AI system to store, recall, and use past data and experiences."

Let's conduct an experiment.

We'll tell the bot: "My name is Oleksiy." It will reply: "Nice to meet you, Oleksiy!". But if we then ask, "What is my name?", it will say something like: "I'm sorry, I do not have access to personal information."

It has already forgotten the previous conversation.

To fix this, we add short-term memory. In our builder, this is the Memory Store block, which we configure to store the last 5 messages.
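Conceptually, this kind of short-term memory is a sliding window: keep the most recent messages, drop the oldest, and resend the window to the model with every new question. The minimal sketch below counts individual messages (whether the n8n block counts messages or question/answer pairs is not assumed here); the `WindowMemory` class is hypothetical.

```python
from collections import deque

# Keep only the 5 most recent messages, mirroring the "last 5 messages" setting.
MEMORY_WINDOW = 5


class WindowMemory:
    """Short-term conversation memory that forgets the oldest messages first."""

    def __init__(self, max_messages: int = MEMORY_WINDOW) -> None:
        # deque with maxlen silently discards the oldest item when full.
        self.messages = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def as_prompt_messages(self, new_user_message: str) -> list[dict]:
        """Return the stored history plus the new message, ready for the LLM."""
        return list(self.messages) + [{"role": "user", "content": new_user_message}]


memory = WindowMemory()
memory.add("user", "My name is Oleksiy.")
memory.add("assistant", "Nice to meet you, Oleksiy!")

# The model now receives the earlier turns, so it can answer "What is my name?".
for msg in memory.as_prompt_messages("What is my name?"):
    print(msg["role"], ":", msg["content"])
```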

Now, when we repeat the dialogue, the agent will remember my name and profession, as this data is stored in the context of the current conversation.

This is critically important for business. A customer doesn't want to repeat their problem to every new operator, and similarly, they don't want to repeat it to a chatbot in every new message.

Of course, this is only memory for a single session. For the agent to remember a customer between conversations, long-term memory is needed – a vector database. This is a more complex technology that we will cover in future articles.

The Main Challenge for AI: Combating Outdated Knowledge and "Hallucinations"

Now that our agent has a "brain" and memory, let's solve the main problem – its limited knowledge. Let's demonstrate this with a simple example.

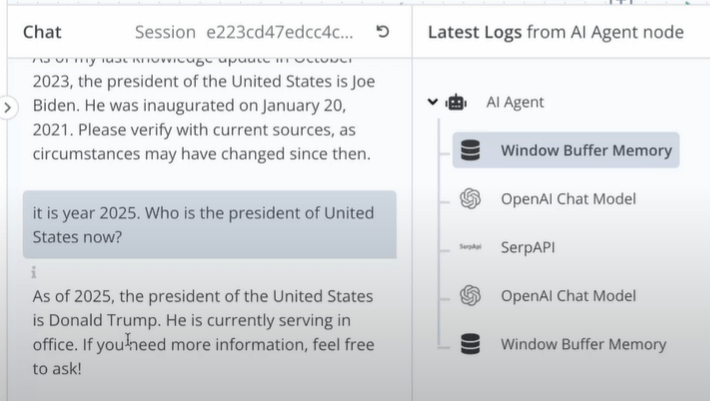

Let's ask our chatbot who the President of the United States is in 2025. It will answer:

"...My knowledge was last updated in October 2023. The President of the United States is Joe Biden."

This answer is false, as in 2025, the president is Donald Trump. The bot is not intentionally deceiving; it simply doesn't have access to newer information.

To solve this problem, we will give our RAG AI agent a new tool – internet access. For this, we integrate the SERP API.

SERP API is a service that allows our program to run Google search queries and receive the results in a machine-readable format. (SERP stands for Search Engine Results Page.)
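As an illustration, here is a sketch of the two things the search tool does: build a query URL and turn the JSON response into short text snippets the LLM can read. It assumes SerpApi's Google engine (`engine`, `q`, and `api_key` parameters, results under `organic_results`); the sample response is hypothetical and trimmed, and no request is actually sent.

```python
import json
from urllib.parse import urlencode

SERPAPI_ENDPOINT = "https://serpapi.com/search.json"


def build_search_url(query: str, api_key: str) -> str:
    """Build a SerpApi Google search URL (not sent here)."""
    params = {"engine": "google", "q": query, "api_key": api_key}
    return f"{SERPAPI_ENDPOINT}?{urlencode(params)}"


def extract_snippets(response_json: str, limit: int = 3) -> list[str]:
    """Turn a search response into short 'title: snippet' lines for the LLM."""
    data = json.loads(response_json)
    results = data.get("organic_results", [])[:limit]
    return [f"{r.get('title', '')}: {r.get('snippet', '')}" for r in results]


print(build_search_url("current president of the USA", api_key="YOUR_API_KEY"))

# Hypothetical, heavily trimmed response used only to exercise the parser.
sample = json.dumps({"organic_results": [
    {"position": 1, "title": "Example result", "link": "https://example.com",
     "snippet": "Example snippet text."},
]})
print(extract_snippets(sample))
```

Keeping only titles and snippets holds the prompt short; a fuller implementation might also pass the links so the answer can cite its sources.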

We add this tool to our agent. Now, when we ask the same question: "It is 2025. Who is the president of the USA?", the following will happen:

- Agentic Logic: The AI agent analyzes the request and understands that its own knowledge (up to 2023) is outdated for providing an accurate answer.

- Decision-Making: It decides to use the tool available to it – an internet search.

- Action: The agent automatically formulates a search query and sends it via the SERP API.

- Response Generation: After receiving up-to-date information from the search, it generates the correct answer: "As of 2025, the President of the United States is Donald Trump."
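With function-calling APIs, the steps above typically work like this: the model receives a description of each available tool, and instead of answering it may return a structured request to call one. The application runs the tool and sends the result back so the model can write its final answer. In n8n this exchange happens inside the agent block; the sketch below makes it visible using OpenAI's Chat Completions tool-calling message format. The tool name `web_search`, its stub implementation, and the example model reply are hypothetical.

```python
import json

# Tool description in OpenAI's function-calling format. The model reads the
# description when deciding whether a search is worthwhile.
SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "web_search",
        "description": "Search the web for up-to-date information "
                       "(news, events, facts after the model's training cutoff).",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "The search query."},
            },
            "required": ["query"],
        },
    },
}


def web_search(query: str) -> str:
    """Hypothetical stub; a real agent would call the SERP API here."""
    return f"[search results for: {query}]"


TOOL_IMPLEMENTATIONS = {"web_search": web_search}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each tool call the model requested and build the 'tool' replies."""
    replies = []
    for call in assistant_message.get("tool_calls", []):
        func = TOOL_IMPLEMENTATIONS[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not as a dict.
        args = json.loads(call["function"]["arguments"])
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": func(**args),
        })
    return replies


# What the model might return once it decides to search (hypothetical example).
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "web_search",
                     "arguments": json.dumps({"query": "president of the USA 2025"})},
    }],
}
print(run_tool_calls(assistant_message))
```

If the model answers without requesting a tool, `tool_calls` is absent and the function returns an empty list, which corresponds to the "my own knowledge is enough" branch.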

This is the essence of the agentic RAG architecture: not a blind search on every request, but a deliberate decision about when the data needs to be verified.

How It All Works Together: A Demonstration of the Agentic RAG Architecture

Let's summarize the complete path of a query in our system:

- Trigger: A user sends a message.

- LLM (Brain): The language model analyzes the request.

- Memory: The agent checks the context of the current conversation.

- Decision: The agent decides whether its existing knowledge is sufficient or if it needs to use a tool.

- Tool (Search): If necessary, the agent queries the SERP API.

- Synthesis: The agent combines all the data (from memory, its own knowledge, and search results) and generates a final response.

- Response: The user receives accurate and up-to-date information.
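The path above can be sketched end to end in a few lines. This is a toy model under stated assumptions: `decide`, `search`, and `synthesize` are hypothetical stubs standing in for the LLM and the SERP API so the code runs offline, and the memory is a simple five-message window.

```python
from collections import deque
from typing import Optional


# Hypothetical stubs: a real agent would ask the LLM in decide() and
# synthesize(), and call the SERP API in search().
def decide(message: str, history: list) -> bool:
    """Return True if the agent should search (stand-in for the LLM's judgment)."""
    return any(word in message.lower() for word in ("2025", "latest", "today"))


def search(query: str) -> str:
    return f"search results for '{query}'"


def synthesize(message: str, history: list, evidence: Optional[str]) -> str:
    """Combine conversation context and optional search results into a reply."""
    sources = f"{len(history)} earlier messages"
    if evidence:
        sources += f" + {evidence}"
    return f"Answer to '{message}' based on {sources}"


def handle_message(message: str, memory: deque) -> str:
    """Trigger: called once for every incoming user message."""
    history = list(memory)                         # Memory: read the context
    needs_search = decide(message, history)        # LLM + Decision
    evidence = search(message) if needs_search else None  # Tool (Search)
    reply = synthesize(message, history, evidence)         # Synthesis
    memory.extend([message, reply])                # remember this turn
    return reply                                   # Response


memory = deque(maxlen=5)
print(handle_message("My name is Oleksiy.", memory))
print(handle_message("It is 2025. Who is the president of the USA?", memory))
```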

Combining memory with internet access transforms a chatbot from a simple auto-responder into a full-fledged employee capable of solving real business tasks.

FYI: In its fiscal third-quarter 2024 financial report, Microsoft noted that revenue from Azure and other cloud services grew by 31%. The company stated that 7 percentage points of this growth came directly from demand for AI services.

Given that the cloud division is one of Microsoft's main sources of income, this means AI demand accounted for nearly a quarter of that business's growth (7 of the 31 percentage points) in a single quarter.

Conclusion: From Routine Automation to Strategic Advantage

In this article, we've journeyed from a basic chatbot to a full-fledged RAG AI agent. We added memory to make conversations feel natural and provided internet access to ensure its knowledge is always current.

The choice between a standard chatbot and an agentic RAG is not a matter of "bad" versus "good." It is a matter of choosing the right tool for a specific business task.

- Standard chatbots remain an excellent solution for the mass automation of simple, repetitive processes.

- Agentic RAG becomes indispensable when a business needs flexibility, the ability to work with dynamic data, and the capacity to solve complex, unstructured problems.

Understanding this evolution allows companies to build a comprehensive AI strategy where each tool plays its role, delivering maximum value.

If you are ready to discuss which tasks in your business are ready to be taken to the next level of automation, book a consultation with CEO Alex Gurbych.